A wave of technology has washed over the academic world, triggering questions that sound more like plot lines from a futuristic movie. Today, every lecturer faces a critical decision: Should artificial intelligence be allowed in student assessments?

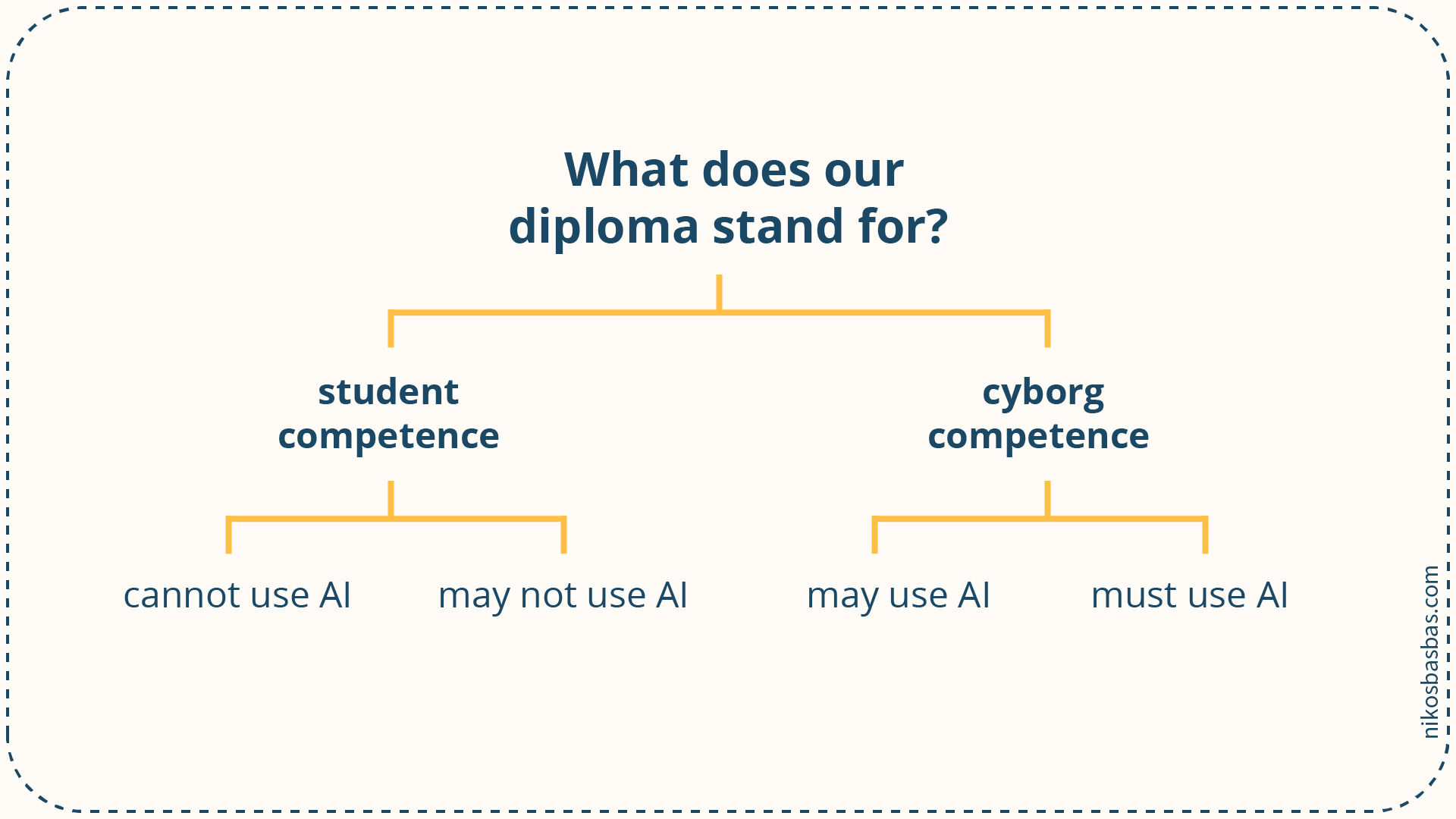

Our higher education diplomas aren't just pieces of paper. They're passports to professional realms, stamps of approval from academic gatekeepers signifying that we are equipped with certain skills. The question then becomes: what exactly are these skills in the age of AI? Is the purpose of a university degree to validate purely human skills, that is, our innate, raw intelligence unaided by technology? Or does it certify our 'cyborg' skills, our ability to blend our cognitive abilities with the immense power of AI tools?

Let's resolve this question by examining four possible policies universities could adopt concerning AI, specifically large language models (LLMs) such as ChatGPT.

The Purely Human Route

Policy 1: LLMs Strictly Off-Limits

To enforce this policy, universities would need to create a controlled environment, such as traditional classrooms and examination halls, complete with diligent proctors and good old pen-and-paper assessments. Exams, essays, presentations, and computing problems all work here.

Policy 2: LLMs Not Allowed

This policy would be ideal for universities that lack the resources to create controlled environments, or for assessments that take more than a few hours, like a thesis or a research project. Since the ban cannot be enforced by proctoring, the responsibility here is to design tasks that students can only complete by themselves, focusing on the process rather than just the outcome. Perhaps most importantly, it also means working with students so that they do not want to use LLMs for the task.

The Cyborg Path

Policy 3: LLMs A Must

This policy would entail requiring students to use AI tools as part of their coursework. It sounds futuristic, but it also means ensuring equal and ethical access to these tools and teaching students how to use them properly.

Policy 4: LLMs Optional

An unrestricted approach. Universities would neither enforce nor discourage the use of AI. It’s all about giving students a problem and letting them figure out the best tools to solve it.

Our current educational system is already a blend of these strategies. Sometimes students are required to turn up on campus for a pen-and-paper exam; other times they're free to use any digital tool at their disposal. But AI has upped the ante. It's no longer just about spell-checking an essay; AI can now practically write that essay for them.

So, what's the ideal approach in this new world?

Balance could be our guiding principle here. We need to continue to appreciate and assess the unique human skills our students possess, especially since they will be overseeing AI's contributions in their future roles. At the same time, we must remember that higher education is designed to produce adaptive thinkers rather than just fact repositories. So it becomes crucial that we equip our students with the skills to harness AI, much like an artist mastering a new tool. As we journey into the future, our students may not be replaced by AI, but those capable of effectively using AI may very well outshine their counterparts.

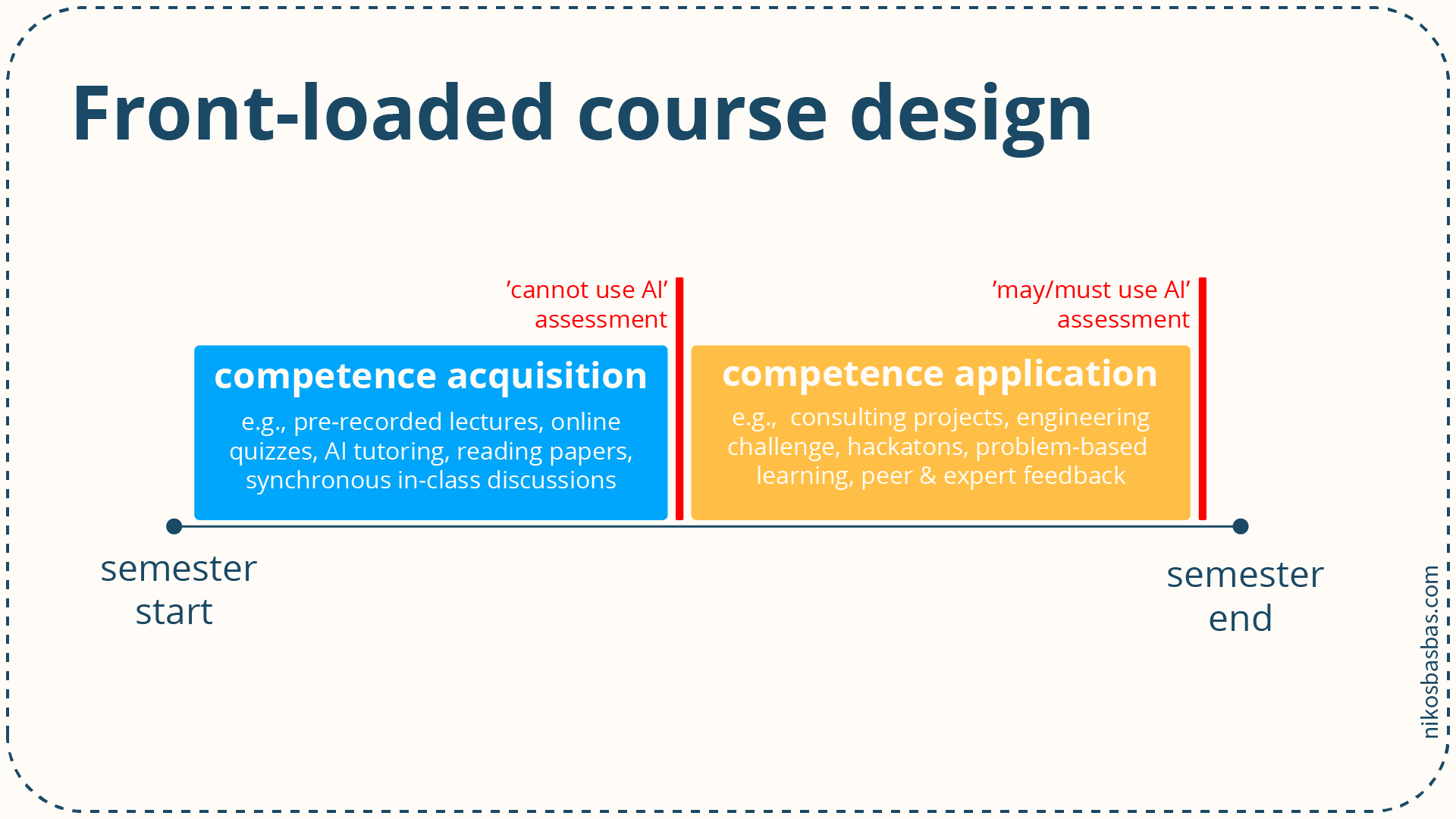

In more practical terms, consider the idea of a 'front-loaded course design.' In the first half of the course, we focus on raw knowledge transfer, ending with an on-campus exam to test the students' grasp of the core concepts. Then, in the second half, we change our approach and let the students grapple with real-world problems, using all tools at their disposal, including AI.

In short, it's not about choosing between human brilliance and cyborg capabilities. The future belongs to those who can masterfully wield both. So, let's assess accordingly.

This blog post was made possible with the help of Claudia Böck, Nadia Klijn, Frea van Dooremaal, Chiara Baldo, ChatGPT (GPT-4), and Midjourney.